We believe our webinar, organized together with AAEON, has convinced you as well. Marlo Banganga, Senior Business Development Manager at AAEON, used the webinar to share key information about machine vision and artificial intelligence. Today, whether to introduce machine vision or AI into a workflow is no longer the question. That is taken for granted; what is being decided is how quickly to implement it, to what extent, and where the benefits of these technologies can be put to use.

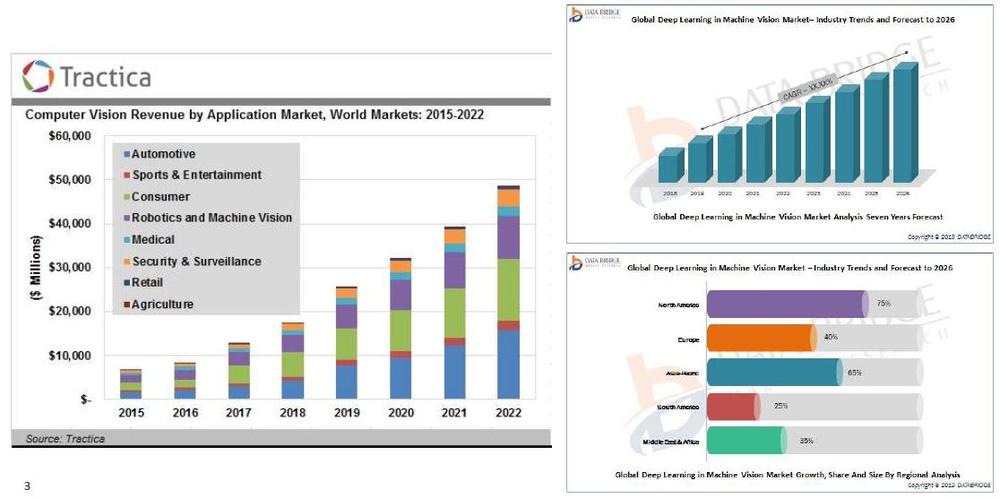

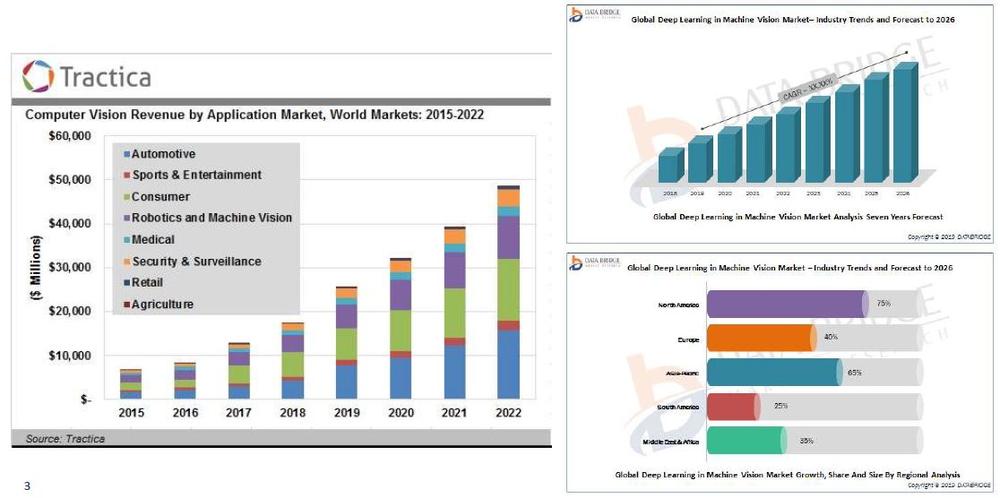

On a global scale, the use of machine vision grows from year to year (pic. 1). In 2015, revenue was on the order of billions of USD; the estimate for 2022 approaches 50 billion USD.

Pic. 1: Computer Vision Revenue

The most common tasks for machine vision in manufacturing are:

- identification

- accurate and fast contactless measurement

- completeness inspection

- verification of correct placement and quality

- navigating robots in space

Every type of task places specific requirements on embedded systems for machine vision. AAEON covers the entire field, from compact systems for simple tasks such as object detection to systems with Intel Xeon processors and slots for GPU-, VPU-, or FPGA-based image processing accelerators. Watch the webinar recording to hear the advice Marlo gave the participants.

The second part of the presentation focused on the principles of neural networks and models for typical applications:

- image classification

- object detection

- face recognition/detection

- video classification

- image segmentation

- speech recognition

Neural networks imitate the functioning of the human brain, which is why they are often called “artificial intelligence”.

The first step is training the model. In this phase, the neural network is presented with input data together with the correct output data. During training, the network adjusts its internal parameters until the error of the output it generates falls below a required threshold. Model training is very demanding on computing power, so it typically runs on leased hardware in data centers.
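
The training loop described above can be sketched in a few lines of Python. This is only an illustration, not AAEON's or any framework's implementation: a single linear neuron stands in for a full network, and the function name `train`, the learning rate, and the error threshold are assumptions chosen for the example.

```python
import random


def train(inputs, targets, max_error=1e-3, learning_rate=0.05, max_epochs=10_000):
    """Fit y = w * x + b by gradient descent until the mean squared error
    drops below max_error (or max_epochs is reached)."""
    rng = random.Random(0)  # fixed seed so the sketch is reproducible
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)  # internal parameters
    n = len(inputs)
    error = float("inf")
    for _ in range(max_epochs):
        # Present the inputs and compare the output with the correct answers.
        residuals = [(w * x + b) - t for x, t in zip(inputs, targets)]
        error = sum(r * r for r in residuals) / n
        if error < max_error:  # error is "less than required": stop training
            break
        # Adjust the parameters in the direction that reduces the error.
        grad_w = 2 * sum(r * x for r, x in zip(residuals, inputs)) / n
        grad_b = 2 * sum(residuals) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, error


# Toy training data (assumed): the "correct outputs" follow y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b, error = train(xs, ys)
```

Real models have millions of parameters instead of two, which is why this phase is usually run on data center hardware rather than on the embedded system itself.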

Thanks to the constant increase in CPU and GPU computing power, falling power consumption, and the availability of chips designed specifically for neural networks, "artificial intelligence" inference can now be deployed locally, right where the data is produced. This use is described by a term that is quite difficult to translate: AI@Edge.
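
To illustrate why inference is so much lighter than training, here is a minimal sketch of local inference. The parameter values, the feature vectors, and the names `infer` and `process_locally` are all hypothetical; a real edge deployment would load a trained model into an inference runtime on the device.

```python
import math

# Hypothetical parameters, assumed to come from an earlier training run.
WEIGHTS = [0.8, -0.5, 0.3]
BIAS = -0.1


def infer(features):
    """Forward pass only: the parameters are read, never updated."""
    activation = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-activation))  # sigmoid score in (0, 1)


def process_locally(frames, threshold=0.5):
    """Score each feature vector on the device and keep only compact results."""
    return [
        {"frame": i, "detected": infer(features) >= threshold}
        for i, features in enumerate(frames)
    ]


# Two made-up feature vectors standing in for data produced on site.
frames = [[1.0, 0.2, 0.5], [0.1, 0.9, 0.0]]
results = process_locally(frames)
```

Only the small `results` list would need to leave the device, while the raw data stays where it was produced.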

AAEON focuses on manufacturing hardware for exactly this purpose; we wrote about it in our article “Artificial Intelligence on the Edge of Your Network”. What does it look like in practice, and what do you need? Learn more in our webinar recording.

Please fill in a few details about yourself before we make the webinar recording available to you. Thank you.